vSAN 8: Architecture and Essential Terminology

This is part of the VMware vSAN guide post series. By using the following link, you can access and explore more objectives from the VMware vSAN study guide.

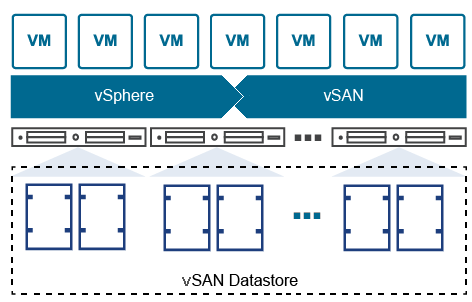

vSAN (VMware vSAN), as mentioned in the previous post, is a software-defined storage solution that runs as part of the ESXi hypervisor. It is a kernel-level, object-based storage solution that provides storage capabilities on a per-VM basis. It creates a shared storage pool by aggregating the local or direct-attached capacity devices of the hosts in a cluster, and this pool is shared across all hosts in the vSAN cluster. By eliminating the need for external shared storage, vSAN simplifies storage configuration and virtual machine provisioning.

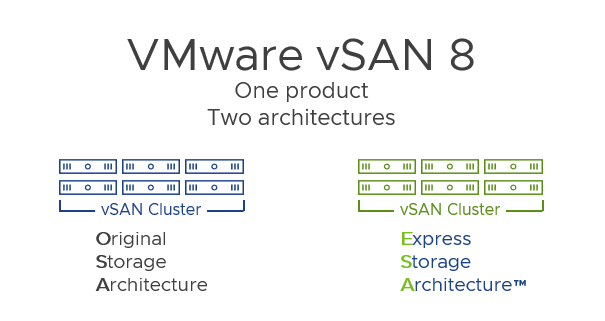

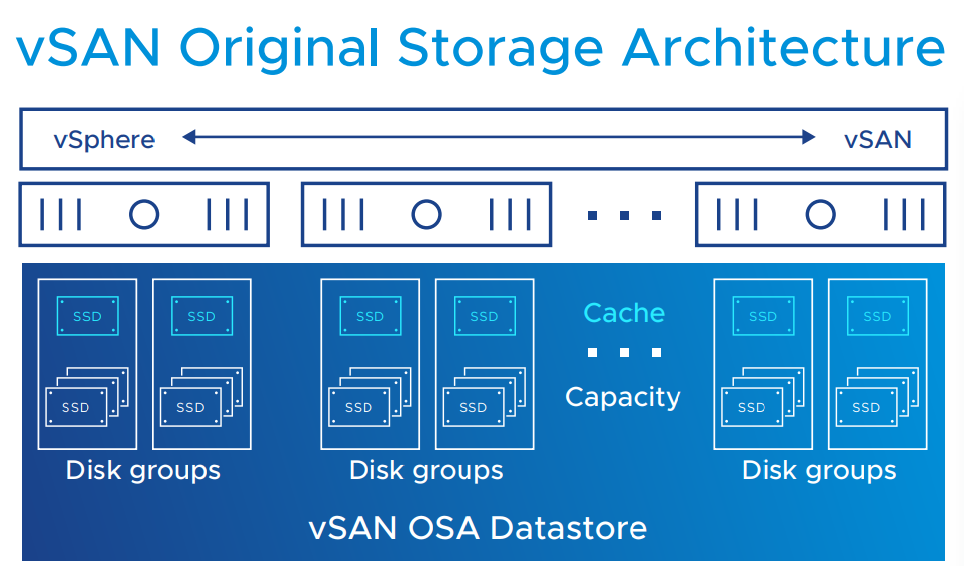

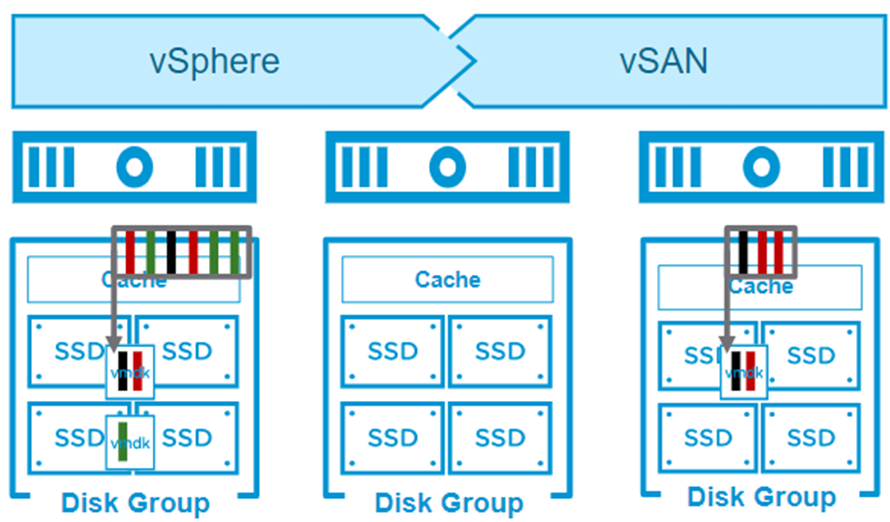

Since its general release in 2014, vSAN has primarily relied on the Original Storage Architecture (OSA), which was designed to deliver highly performant storage with the SATA/SAS devices that were prevalent at the time. In the OSA, each host that contributes storage devices to the vSAN datastore must provide at least one flash device for cache and at least one device for capacity. These devices form one or more disk groups, each comprising one flash cache device and one or more capacity devices for persistent storage. Configuring multiple disk groups per host lets organizations maximize performance and capacity.

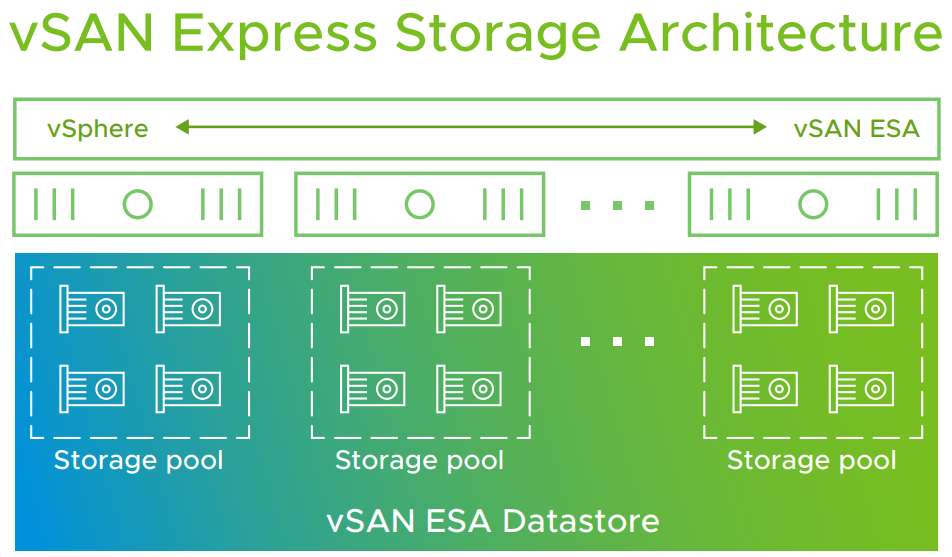

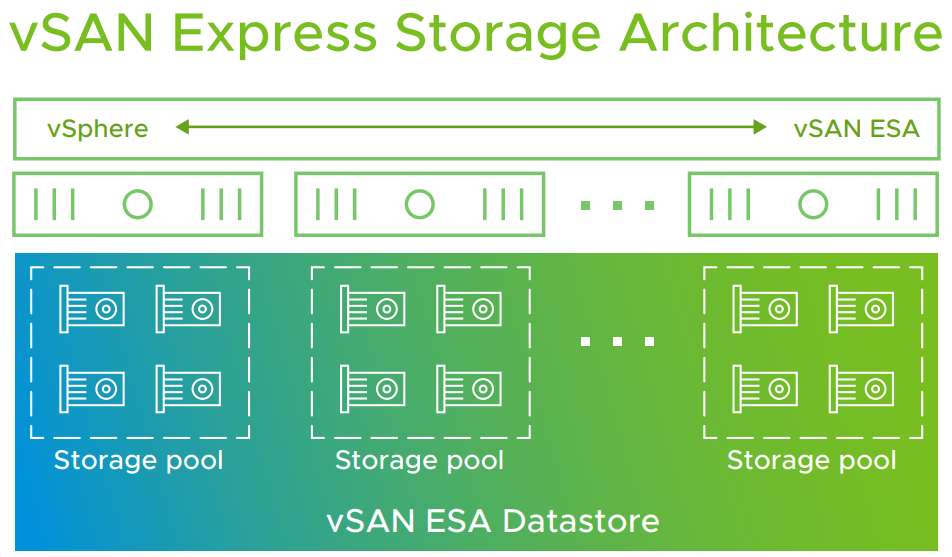

However, with the rapid advancement of hardware technology, VMware recognized the need for a new architecture that could harness the full potential of modern storage devices. vSAN 8 therefore added a new, optional architecture called the vSAN Express Storage Architecture (ESA). ESA is an alternative architecture designed to process and store data with new levels of efficiency, scalability, and performance. In ESA, all storage devices claimed by vSAN contribute to both capacity and performance. On each host, the devices claimed by vSAN collectively form a storage pool, which represents the caching and capacity that the host provides to the vSAN datastore.

One significant advantage of ESA is its ability to unlock the capabilities of modern hardware, particularly high-performance NVMe-based TLC flash devices. By optimizing support for such devices, ESA ensures organizations can fully leverage the potential of their storage infrastructure, resulting in improved performance and scalability. This architectural shift enables vSAN to accommodate the evolving storage needs of businesses and offers the flexibility to tailor the architecture to suit specific requirements.

In this post, I will take a deep dive into these two architectures and provide the essential terminology you need to work effectively with vSAN.

vSAN Original Storage Architecture

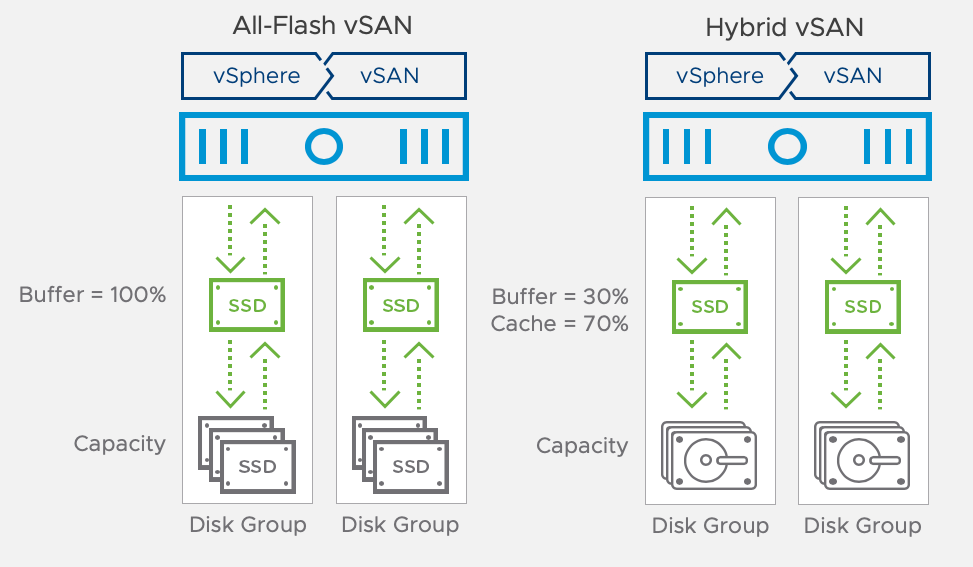

vSAN Original Storage Architecture provides two main configurations: a hybrid configuration that combines flash-based devices with magnetic disks, and an all-flash configuration that utilizes flash technology for both caching and capacity layers.

In the hybrid configuration, server-based flash devices are used to create a cache layer, optimizing performance. At the same time, magnetic disks are employed for capacity and persistent data storage, ensuring a balance between performance and storage capacity. This combination delivers enterprise-level performance and a resilient storage platform for various workloads.

On the other hand, the all-flash configuration takes advantage of flash devices for both caching and capacity, offering higher levels of performance and responsiveness. By utilizing flash storage throughout the entire system, vSAN delivers exceptional performance for demanding applications.

vSAN can be enabled on an existing host cluster or while creating a new cluster, which gives flexibility in deployment scenarios. For optimal performance, it is recommended that all ESXi hosts in the cluster have similar or identical configurations, including their storage setup. The devices on each contributing host form one or more disk groups (in the image above, each host contributes two disk groups). A disk group comprises one flash cache device and one to seven capacity devices for persistent storage. Depending on the chosen configuration, the capacity devices are either flash devices or magnetic disks.
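The disk group rules above (one flash cache device plus one to seven capacity devices) can be expressed as a small validation check. This is a minimal sketch: the `Device`/`DiskGroup` classes and the device names are hypothetical, and the rule that a group does not mix flash and magnetic capacity devices is an assumption labeled in the code, not something vSAN exposes this way.

```python
from dataclasses import dataclass, field
from typing import List

# Limits from the paragraph above: one flash cache device plus 1 to 7 capacity devices.
MIN_CAPACITY_DEVICES = 1
MAX_CAPACITY_DEVICES = 7


@dataclass
class Device:
    name: str       # hypothetical device label
    size_gb: int
    is_flash: bool


@dataclass
class DiskGroup:
    cache: Device
    capacity: List[Device] = field(default_factory=list)


def validate_disk_group(group: DiskGroup) -> List[str]:
    """Return a list of problems; an empty list means the group passes these checks."""
    problems = []
    if not group.cache.is_flash:
        problems.append(f"cache device {group.cache.name} is not flash")
    count = len(group.capacity)
    if not MIN_CAPACITY_DEVICES <= count <= MAX_CAPACITY_DEVICES:
        problems.append(f"{count} capacity devices; expected 1 to 7")
    # Assumption for this sketch: capacity devices are all flash (all-flash)
    # or all magnetic (hybrid), never a mix within one group.
    if len({d.is_flash for d in group.capacity}) > 1:
        problems.append("capacity devices mix flash and magnetic disks")
    return problems


hybrid = DiskGroup(cache=Device("ssd0", 800, True),
                   capacity=[Device(f"hdd{i}", 4000, False) for i in range(3)])
too_big = DiskGroup(cache=Device("ssd1", 800, True),
                    capacity=[Device(f"ssd{i}", 3840, True) for i in range(2, 10)])
print(validate_disk_group(hybrid))   # []
print(validate_disk_group(too_big))  # ['8 capacity devices; expected 1 to 7']
```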

In a hybrid configuration, the cache device serves a dual purpose: 70% of its capacity is used as a read cache and 30% as a write buffer. When a requested block is present in the read cache, vSAN serves the read from there. On a read miss, when the block is not in the read cache, vSAN retrieves the data from the capacity tier and returns it to the requesting application. Serving frequently read blocks from flash makes efficient use of the cache device and minimizes reads from the slower magnetic capacity tier.
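The 70/30 split is simple arithmetic, sketched below for a hypothetical 800 GB cache device. Integer percentages are used so the results are exact.

```python
from typing import Tuple

READ_CACHE_PCT = 70    # hybrid: share of the cache device used as read cache
WRITE_BUFFER_PCT = 30  # hybrid: share of the cache device used as write buffer


def hybrid_cache_split(cache_device_gb: int) -> Tuple[float, float]:
    """Split a hybrid disk group's cache device into (read cache, write buffer) in GB."""
    read_cache = cache_device_gb * READ_CACHE_PCT / 100
    write_buffer = cache_device_gb * WRITE_BUFFER_PCT / 100
    return read_cache, write_buffer


print(hybrid_cache_split(800))  # (560.0, 240.0)
```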

In an all-flash configuration, the entire cache device is dedicated to write buffering. Unlike in the hybrid configuration, there is no read cache available. If a requested block is present in the write buffer, vSAN serves the request directly from there. However, if the block is not found in the write buffer, vSAN reads the data from the capacity tier. Since the capacity tier consists of all-flash storage, the impact of accessing it for read operations is minimal, as flash storage provides faster read speeds compared to traditional hard drives.
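The difference between the two read paths described above can be captured in a few lines. This is a simplified model that follows only the behavior stated in the text; block numbers are hypothetical, and real vSAN I/O handling involves more steps than a set lookup.

```python
from typing import Set


def read_source(block: int, mode: str, read_cache: Set[int], write_buffer: Set[int]) -> str:
    """Return the tier that serves a read of `block` in this simplified model."""
    if mode == "hybrid":
        # Hybrid: the read cache (70% of the cache device) serves read hits.
        return "read cache" if block in read_cache else "capacity tier"
    if mode == "all-flash":
        # All-flash: no read cache; only blocks still in the write buffer are served from it.
        return "write buffer" if block in write_buffer else "capacity tier"
    raise ValueError(f"unknown mode: {mode}")


print(read_source(7, "hybrid", read_cache={7, 8}, write_buffer=set()))     # read cache
print(read_source(9, "all-flash", read_cache=set(), write_buffer={1, 2}))  # capacity tier
```

In the all-flash case, a miss costs a read from flash capacity devices, which is why dropping the read cache is acceptable there.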

By optimizing the utilization of the cache device and leveraging the performance benefits of flash storage, both hybrid and all-flash configurations in vSAN aim to provide efficient and responsive data access for applications while balancing cost-effectiveness and performance requirements.

vSAN Express Storage Architecture

As mentioned before, vSAN ESA (Express Storage Architecture) is a new optional architecture introduced in vSAN 8. The vSAN ESA represents a significant advancement in efficiency, scalability, and performance capabilities compared to the previous storage architecture (OSA) used in earlier versions of vSAN.

It’s important to note that vSAN ESA is not intended to replace the current vSAN architecture, which is now referred to as the vSAN Original Storage Architecture (OSA). Instead, vSAN ESA is an alternative architecture that builds upon the existing one. It enables vSAN to leverage the latest server hardware (NVMe-based flash devices) and protocols, resulting in enhanced capabilities and improved performance.

As you can see in the image above, the new architecture moves beyond traditional concepts such as disk groups and separate cache and capacity tiers. Instead, it uses a single-tier storage pool in which all storage devices claimed by vSAN contribute to both capacity and performance. Because every device serves both roles, vSAN can improve drive serviceability and data availability management, and ultimately reduce costs, unlike the previous architecture. As a result, the number of storage devices a host can contribute is no longer dictated by vSAN disk group limits but by the host configuration.

This new architecture changes how data is processed and stored. It adds a new layer, the vSAN Log-Structured File System (LFS), which ingests incoming data quickly and efficiently while preparing it for space-efficient storage. The vSAN LFS also provides highly efficient and scalable metadata storage, and it writes data in a resilient, space-efficient manner without compromising performance. In addition, a new optimized log-structured object manager (vSAN Log-Structured Object Manager) and new data structures minimize metadata overhead while enabling parallel, efficient data transfer to the devices.
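To give a feel for what "log-structured" means, here is a toy log-structured store: writes are buffered, flushed as whole segments, never overwritten in place, and located through an index. This illustrates the generic technique only; it is NOT how vSAN LFS is implemented, and the class name and `segment_blocks` parameter are hypothetical.

```python
from typing import Dict, List, Tuple


class TinyLogStore:
    """Toy log-structured store (generic illustration, not vSAN LFS)."""

    def __init__(self, segment_blocks: int = 4):
        self.segment_blocks = segment_blocks
        self.pending: List[Tuple[int, bytes]] = []    # incoming writes buffered in memory
        self.log: List[List[Tuple[int, bytes]]] = []  # flushed segments, append-only
        self.index: Dict[int, Tuple[int, int]] = {}   # logical block -> (segment, slot)

    def write(self, lba: int, data: bytes) -> None:
        self.pending.append((lba, data))
        if len(self.pending) >= self.segment_blocks:
            self.flush()  # full segment: write it out as one large sequential append

    def flush(self) -> None:
        if not self.pending:
            return
        seg_no = len(self.log)
        self.log.append(self.pending)
        for slot, (lba, _) in enumerate(self.pending):
            self.index[lba] = (seg_no, slot)  # newest location wins; old data stays in the log
        self.pending = []

    def read(self, lba: int) -> bytes:
        # Unflushed writes are newest, so check them first (latest write last).
        for pending_lba, data in reversed(self.pending):
            if pending_lba == lba:
                return data
        seg_no, slot = self.index[lba]
        return self.log[seg_no][slot][1]


store = TinyLogStore(segment_blocks=2)
store.write(1, b"a")
store.write(2, b"b")  # second write fills the segment and triggers a flush
store.write(1, b"c")  # overwrite goes to a new location, not in place
print(store.read(1))  # b'c'
```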

For more information about how these two architectures differ in reading and storing data, please read the following post.

vSAN Datastore

After you enable vSAN on a cluster, whether ESA or OSA, a vSAN datastore is created. It appears as another datastore type alongside vSphere Virtual Volumes, VMFS, and NFS. vSAN provides a single datastore that is accessible to all hosts in the cluster. In OSA, only the capacity devices (magnetic disks or flash devices) contribute to the vSAN datastore, whereas in ESA all claimed devices contribute. In vCenter Server, the storage characteristics of the vSAN datastore are presented as a set of capabilities that you can use when defining storage policies for virtual machines. These policies help vSAN determine the optimal placement of virtual machine objects based on their specific requirements.
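The difference in what counts toward the datastore can be sketched as a raw-capacity calculation. The device sizes and the `(size, role)` tuple format are hypothetical, and the numbers are raw capacity before any storage policy overhead (mirroring or parity) is applied.

```python
from typing import List, Tuple

# Hypothetical device description: (size in GB, role). In OSA the role is
# "cache" or "capacity"; ESA has no cache tier, so the role is ignored there.
Device = Tuple[int, str]


def raw_datastore_contribution(devices: List[Device], architecture: str) -> int:
    """Raw GB a host adds to the vSAN datastore, before storage-policy overhead."""
    if architecture == "OSA":
        # Cache devices accelerate I/O but do not add datastore capacity.
        return sum(size for size, role in devices if role == "capacity")
    if architecture == "ESA":
        # Single-tier storage pool: every claimed device contributes capacity.
        return sum(size for size, _ in devices)
    raise ValueError(f"unknown architecture: {architecture}")


def cluster_raw_capacity(hosts: List[List[Device]], architecture: str) -> int:
    return sum(raw_datastore_contribution(h, architecture) for h in hosts)


osa_host = [(800, "cache"), (4000, "capacity"), (4000, "capacity")]
esa_host = [(3840, "pool")] * 4
print(cluster_raw_capacity([osa_host] * 3, "OSA"))  # 24000
print(cluster_raw_capacity([esa_host] * 3, "ESA"))  # 46080
```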

Object and Component

As mentioned previously, vSAN is object-based storage, which means storing and managing data in the form of objects, but what is an object?

An object is the unit of data that vSAN stores and manages, and each object can have its own protection level and stripe size defined by a storage policy. For instance, each VMDK is an object, and you can define the protection level and stripe size for each individual VMDK. To better understand this concept, let’s consider a typical virtual machine and the files it comprises.

A typical virtual machine includes the following files in the datastore:

- .vmx: Virtual machine configuration file

- .nvram: Virtual machine BIOS or EFI configuration

- .log: Virtual machine log files

- .vmdk: Virtual machine disk descriptor (disk characteristics)

- -flat.vmdk: Virtual machine data disk

- .vswp: Virtual machine swap file

- -delta.vmdk: Snapshot delta disk, created when VM snapshots are taken

- .vmem: Virtual machine memory paging file, created if a snapshot includes the memory option

There are additional VM files not covered here, but you can find more information about them in this link. Now, treat each large file, such as the VMDK, VMEM, and swap files, as a separate object, and combine the smaller files into a single object known as the VM Home Namespace. This gives the following list of objects:

- VM Home Namespace: A directory that stores all of the VM's small files, including .nvram, .vmsd, .vmx, .vmx-*, .vswp, .log, and .hlog files.

- VMDK Objects: The virtual machine disks that store the contents of the virtual machine's hard disk drives.

- VM Swap Objects: Created when a virtual machine is powered on and its memory is not reserved.

- Snapshot Delta VMDK Objects: Created when VM snapshots are taken.

- Memory Objects: Created when you select the memory option while taking a snapshot, or when you suspend a VM. These objects are identified as .vmem files.

- iSCSI-related objects

- vSAN file share-related objects

- vSAN performance data objects

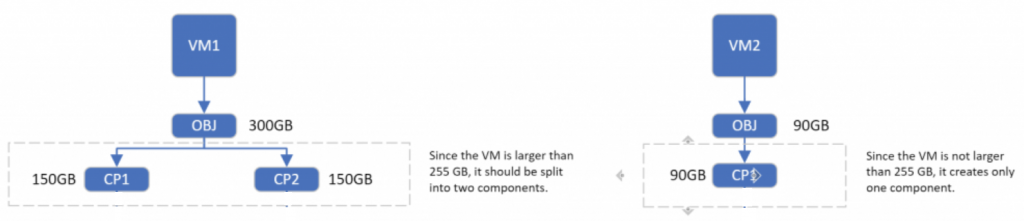

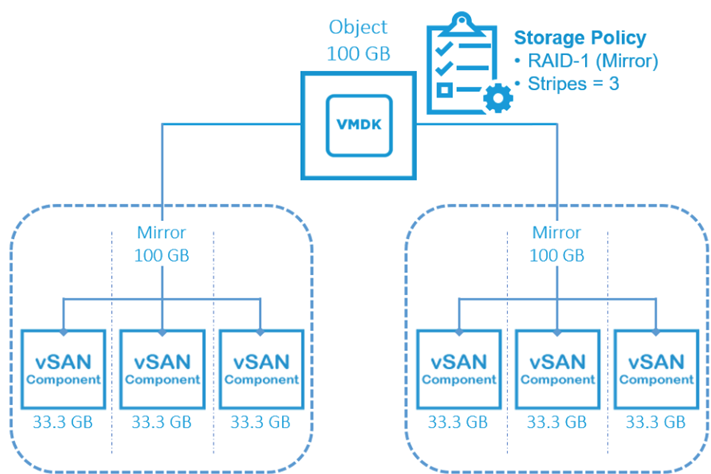
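The grouping described above (large files become their own objects, small files go into the VM Home Namespace) can be sketched as a file-name classifier. This follows the article's simplified grouping only: the file names are hypothetical, and treating every .vswp file as the VM swap object is a simplification, since real vSAN layouts have more cases.

```python
from collections import defaultdict
from typing import Dict, List


def object_for_file(filename: str) -> str:
    """Map a VM file name to the object type it belongs to (simplified)."""
    name = filename.lower()
    if name.endswith("-delta.vmdk"):
        return "Snapshot Delta VMDK"
    if name.endswith(".vmdk"):
        return "VMDK"
    if name.endswith(".vswp"):
        return "VM Swap"
    if name.endswith(".vmem"):
        return "Memory"
    # Everything else is a small file kept in the VM Home Namespace.
    return "VM Home Namespace"


def group_into_objects(files: List[str]) -> Dict[str, List[str]]:
    objects: Dict[str, List[str]] = defaultdict(list)
    for f in files:
        objects[object_for_file(f)].append(f)
    return dict(objects)


files = ["web01.vmx", "web01.nvram", "vmware.log", "web01-flat.vmdk",
         "web01-000001-delta.vmdk", "web01.vswp", "web01-Snapshot1.vmem"]
for obj, members in group_into_objects(files).items():
    print(f"{obj}: {members}")
```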

Now that we’ve discussed what an object is, it’s important to understand that each object is composed of one or more components. Components are replicas or segments of the object’s data blocks. In other words, components hold an object’s actual data blocks, or copies of them.

In a vSAN cluster, components are stored on individual hosts. The number of components for an object varies with the configured vSAN storage policy, which determines the level of data protection and availability required for the object. For example, vSAN automatically splits objects larger than 255 GB into multiple components, and depending on the protection level you select in the storage policy, additional components may be created. I will explain these components and how they are stored in the vSAN datastore in detail at a later stage (Storage Policy).
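A rough way to see how object size and protection level multiply the component count is sketched below. It uses the 255 GB split from the text and assumes the object is stored as full mirrored copies. It deliberately ignores witness components, stripe width, and erasure coding, so treat the result as an estimate of data components only.

```python
import math

MAX_COMPONENT_GB = 255  # from the text: larger objects are split into multiple components


def data_components(object_gb: float, copies: int) -> int:
    """Rough count of data components for an object stored as `copies` full replicas.

    Simplification: ignores witness components, stripe width, and erasure coding.
    """
    per_copy = max(1, math.ceil(object_gb / MAX_COMPONENT_GB))
    return per_copy * copies


print(data_components(100, copies=2))  # 2
print(data_components(600, copies=2))  # 6
```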

Storage Policy

As mentioned earlier, when you use vSAN, the storage characteristics of the vSAN datastore are represented as a set of capabilities. These capabilities allow you to define storage requirements for virtual machines through policies, which play a crucial role in determining factors such as performance and availability.

vSAN ensures that all virtual machines on the vSAN datastore have at least one assigned storage policy. If you don’t specify a policy during deployment, vSAN automatically applies a default policy with a Failures to tolerate setting of 1, a single disk stripe per object, and thin provisioned virtual disks. However, it is highly recommended to define your own storage policies, even if they have the same requirements as the default policy. This practice ensures optimal results and allows you to customize the policies according to your specific needs.
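The fallback to a default policy can be modeled as below. The `StoragePolicy` class and its field names are hypothetical and are not the vSphere or vSAN API; the default values mirror the ones described above (failures to tolerate of 1, one stripe per object, thin provisioning).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StoragePolicy:
    """Hypothetical model of a few vSAN policy settings (not the vSphere API)."""
    name: str
    failures_to_tolerate: int = 1
    stripes_per_object: int = 1
    thin_provisioned: bool = True


# Values of the default policy as described in the text.
DEFAULT_POLICY = StoragePolicy(name="vSAN Default Storage Policy")


def effective_policy(assigned: Optional[StoragePolicy]) -> StoragePolicy:
    """Every VM ends up with a policy: the assigned one, or the default."""
    return assigned if assigned is not None else DEFAULT_POLICY


# An explicitly defined policy with the same requirements as the default.
gold = StoragePolicy(name="gold-ftt1")
print(effective_policy(None).name)  # vSAN Default Storage Policy
print(effective_policy(gold).name)  # gold-ftt1
```

Defining `gold` explicitly, even with default values, gives you a named policy you can later change without touching the default.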

By defining your own storage policies, you have better control over the performance and availability of your virtual machines. You can tailor the policies to suit your workload requirements, taking into consideration factors such as redundancy, disk striping, and provisioning options. I will cover storage policies in more detail later.

Deduplication and Compression

One of the features I mentioned in the previous post was vSAN's ability to leverage advanced caching and data locality techniques to deliver high-performance storage. Alongside these, vSAN incorporates deduplication and compression to reduce the storage footprint and optimize resource utilization. Deduplication eliminates redundant data, while compression reduces the size of data blocks. Together, these features increase usable capacity.
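The two ideas can be illustrated with a generic sketch: split data into fixed-size blocks, store each unique block once (deduplication by fingerprint), and compress what is stored. The 4 KiB block size, SHA-256 fingerprints, and zlib compression are assumptions chosen for the illustration; they are not a description of vSAN's internal algorithms.

```python
import hashlib
import zlib
from typing import Dict, List, Tuple

BLOCK_SIZE = 4096  # assumed fixed block size for this illustration


def dedup_and_compress(data: bytes) -> Tuple[Dict[str, bytes], List[str]]:
    """Store each unique block once (deduplication), compressed with zlib."""
    store: Dict[str, bytes] = {}  # fingerprint -> compressed unique block
    refs: List[str] = []          # fingerprints in original order
    for offset in range(0, len(data), BLOCK_SIZE):
        block = data[offset:offset + BLOCK_SIZE]
        fingerprint = hashlib.sha256(block).hexdigest()
        if fingerprint not in store:  # duplicate blocks are stored only once
            store[fingerprint] = zlib.compress(block)
        refs.append(fingerprint)
    return store, refs


def restore(store: Dict[str, bytes], refs: List[str]) -> bytes:
    """Rebuild the original data from the fingerprint list."""
    return b"".join(zlib.decompress(store[fp]) for fp in refs)


data = b"A" * BLOCK_SIZE * 3 + b"B" * BLOCK_SIZE  # 4 logical blocks, 2 unique
store, refs = dedup_and_compress(data)
stored = sum(len(v) for v in store.values())
print(len(refs), len(store))  # 4 2
print(len(data), "->", stored, "bytes")
```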

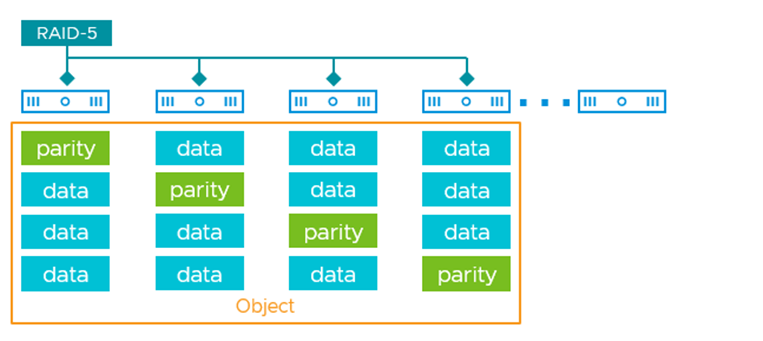

RAID-1 (Mirroring) and RAID-5/6 (Erasure Coding)

vSAN provides different data protection mechanisms. RAID-1 mirroring duplicates data across multiple hosts, ensuring redundancy and high availability. RAID-5/6 erasure coding distributes data with parity information, allowing for efficient use of storage resources while maintaining resiliency.
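The capacity trade-off between mirroring and erasure coding is easy to quantify. The sketch below assumes the usual vSAN OSA layouts (RAID-1 keeps FTT + 1 full copies, RAID-5 is 3 data + 1 parity, RAID-6 is 4 data + 2 parity); ESA can use different RAID-5 layouts, so treat the multipliers as OSA-specific assumptions.

```python
from fractions import Fraction

# Raw-capacity multipliers, assuming the usual vSAN OSA layouts:
# RAID-1 keeps FTT + 1 full copies, RAID-5 is 3 data + 1 parity,
# RAID-6 is 4 data + 2 parity.
MULTIPLIER = {
    "RAID-1 (FTT=1)": Fraction(2),
    "RAID-1 (FTT=2)": Fraction(3),
    "RAID-5 (FTT=1)": Fraction(3 + 1, 3),
    "RAID-6 (FTT=2)": Fraction(4 + 2, 4),
}


def raw_capacity_needed(usable_gb: int, scheme: str) -> Fraction:
    """Raw datastore capacity consumed by `usable_gb` of data under `scheme`."""
    return usable_gb * MULTIPLIER[scheme]


for scheme in MULTIPLIER:
    print(f"{scheme}: 100 GB -> {float(raw_capacity_needed(100, scheme)):.2f} GB raw")
```

For the same failures to tolerate, erasure coding consumes less raw capacity than mirroring (about 1.33x versus 2x for FTT=1), which is the "efficient use of storage resources" mentioned above.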

Conclusion

Knowing the essential terminology for VMware vSAN is crucial to managing it efficiently and getting the most out of this powerful software-defined storage solution. In this post and the previous one, I explored key terms such as software-defined data centers, software-defined storage, VMware vSAN, disk groups, storage pools, objects, policy-based management, and more. Equipped with this knowledge, you’ll be better prepared to follow the rest of this guide series. In the next post, I will go through the types of vSAN deployments. Stay tuned for more in-depth articles on VMware vSAN and other exciting technologies.
